Note

Click here to download the full example code

Emcee and the Model Interface¶

import corner

import matplotlib.pyplot as plt

import numpy as np

import lmfit

Set up a double-exponential function and create a Model

Generate some fake data from the model with added noise

truths = (3.0, 2.0, -5.0, 10.0)

x = np.linspace(1, 10, 250)

np.random.seed(0)

y = double_exp(x, *truths)+0.1*np.random.randn(x.size)

Create model parameters and give them initial values

p = model.make_params(a1=4, t1=3, a2=4, t2=3)

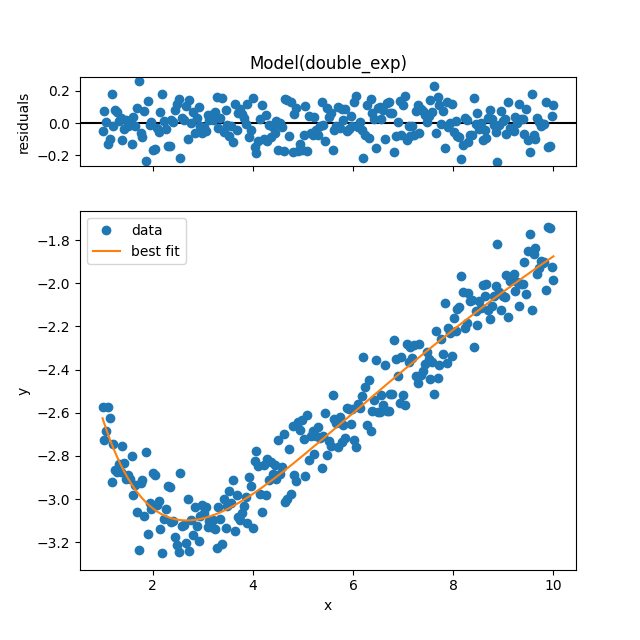

Fit the model using a traditional minimizer, and show the output:

Out:

/Users/Newville/Codes/lmfit-py/examples/example_emcee_Model_interface.py:16: RuntimeWarning: overflow encountered in exp

return a1*np.exp(-x/t1) + a2*np.exp(-(x-0.1) / t2)

/Users/Newville/Codes/lmfit-py/examples/example_emcee_Model_interface.py:16: RuntimeWarning: overflow encountered in multiply

return a1*np.exp(-x/t1) + a2*np.exp(-(x-0.1) / t2)

/Users/Newville/Codes/lmfit-py/lmfit/minimizer.py:173: RuntimeWarning: overflow encountered in multiply

return (r*r).sum()

[[Fit Statistics]]

# fitting method = Nelder-Mead

# function evals = 609

# data points = 250

# variables = 4

chi-square = 2.33333982

reduced chi-square = 0.00948512

Akaike info crit = -1160.54007

Bayesian info crit = -1146.45423

[[Variables]]

a1: 2.98623689 +/- 0.15010519 (5.03%) (init = 4)

t1: 1.30993186 +/- 0.13449652 (10.27%) (init = 3)

a2: -4.33525597 +/- 0.11765819 (2.71%) (init = 4)

t2: 11.8099125 +/- 0.47172590 (3.99%) (init = 3)

[[Correlations]] (unreported correlations are < 0.100)

C(a2, t2) = 0.988

C(t1, a2) = -0.928

C(t1, t2) = -0.885

C(a1, t1) = -0.609

C(a1, a2) = 0.297

C(a1, t2) = 0.232

(<Figure size 640x640 with 2 Axes>, GridSpec(2, 1, height_ratios=[1, 4]))

Calculate parameter covariance using emcee:

start the walkers out at the best-fit values

set is_weighted to False to estimate the noise weights

set some sensible priors on the uncertainty to keep the MCMC in check

run the MCMC algorithm and show the results:

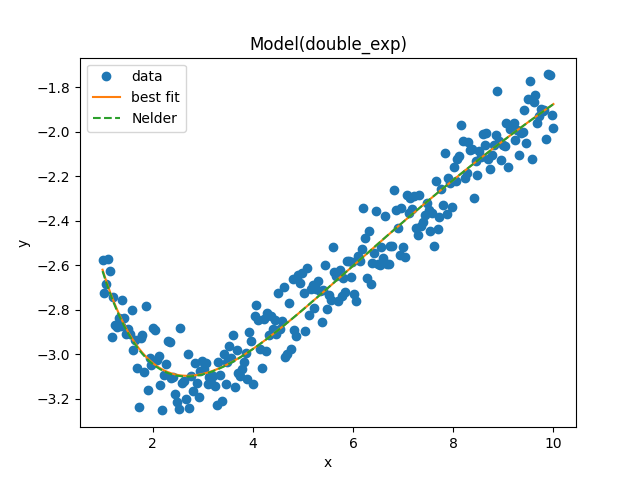

result_emcee = model.fit(data=y, x=x, params=emcee_params, method='emcee',

nan_policy='omit', fit_kws=emcee_kws)

lmfit.report_fit(result_emcee)

ax = plt.plot(x, model.eval(params=result.params, x=x), label='Nelder', zorder=100)

result_emcee.plot_fit(ax=ax, data_kws=dict(color='gray', markersize=2))

plt.show()

Out:

The chain is shorter than 50 times the integrated autocorrelation time for 5 parameter(s). Use this estimate with caution and run a longer chain!

N/50 = 20;

tau: [42.86236444 45.34591993 45.49852485 45.00527243 38.05671107]

[[Fit Statistics]]

# fitting method = emcee

# function evals = 100000

# data points = 250

# variables = 5

chi-square = 245.135584

reduced chi-square = 1.00055340

Akaike info crit = 5.08763609

Bayesian info crit = 22.6949407

[[Variables]]

a1: 2.99729292 +/- 0.14788788 (4.93%) (init = 2.986237)

t1: 1.31860631 +/- 0.14157651 (10.74%) (init = 1.309932)

a2: -4.34320377 +/- 0.12457281 (2.87%) (init = -4.335256)

t2: 11.7995440 +/- 0.49140460 (4.16%) (init = 11.80991)

__lnsigma: -2.32707117 +/- 0.04573634 (1.97%) (init = -2.302585)

[[Correlations]] (unreported correlations are < 0.100)

C(a2, t2) = 0.982

C(t1, a2) = -0.933

C(t1, t2) = -0.888

C(a1, t1) = -0.567

C(a1, a2) = 0.271

C(a1, t2) = 0.230

/Users/Newville/Codes/lmfit-py/examples/example_emcee_Model_interface.py:60: UserWarning: Matplotlib is currently using agg, which is a non-GUI backend, so cannot show the figure.

plt.show()

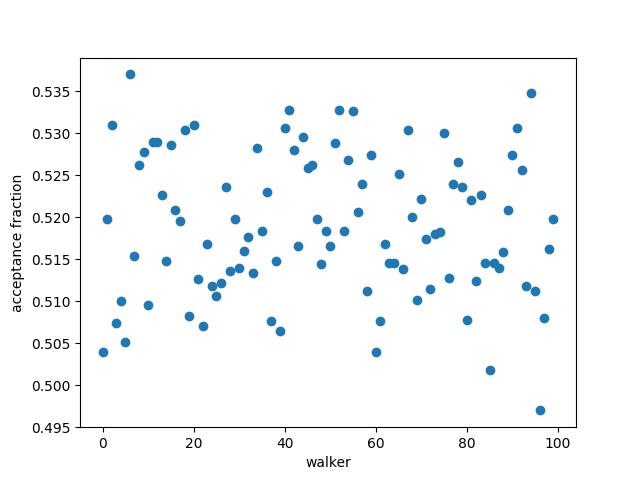

check the acceptance fraction to see whether emcee performed well

plt.plot(result_emcee.acceptance_fraction)

plt.xlabel('walker')

plt.ylabel('acceptance fraction')

plt.show()

Out:

/Users/Newville/Codes/lmfit-py/examples/example_emcee_Model_interface.py:67: UserWarning: Matplotlib is currently using agg, which is a non-GUI backend, so cannot show the figure.

plt.show()

try to compute the autocorrelation time

if hasattr(result_emcee, "acor"):

print("Autocorrelation time for the parameters:")

print("----------------------------------------")

for i, p in enumerate(result.params):

print(p, result.acor[i])

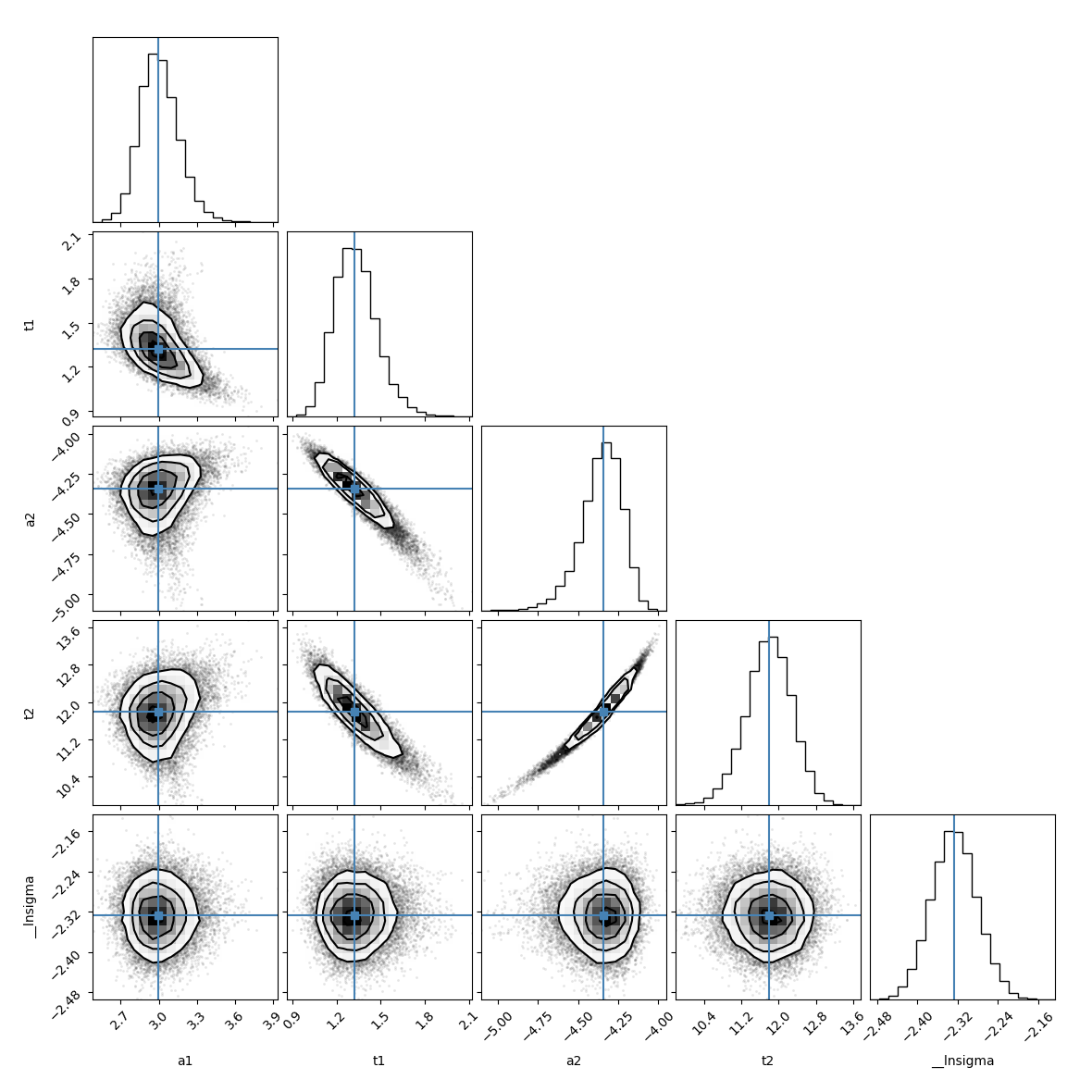

Plot the parameter covariances returned by emcee using corner

emcee_corner = corner.corner(result_emcee.flatchain, labels=result_emcee.var_names,

truths=list(result_emcee.params.valuesdict().values()))

print("\nmedian of posterior probability distribution")

print('--------------------------------------------')

lmfit.report_fit(result_emcee.params)

# find the maximum likelihood solution

highest_prob = np.argmax(result_emcee.lnprob)

hp_loc = np.unravel_index(highest_prob, result_emcee.lnprob.shape)

mle_soln = result_emcee.chain[hp_loc]

print("\nMaximum likelihood Estimation")

print('-----------------------------')

for ix, param in enumerate(emcee_params):

print(param + ': ' + str(mle_soln[ix]))

quantiles = np.percentile(result_emcee.flatchain['t1'], [2.28, 15.9, 50, 84.2, 97.7])

print("\n\n1 sigma spread", 0.5 * (quantiles[3] - quantiles[1]))

print("2 sigma spread", 0.5 * (quantiles[4] - quantiles[0]))

Out:

median of posterior probability distribution

--------------------------------------------

[[Variables]]

a1: 2.99729292 +/- 0.14788788 (4.93%) (init = 2.986237)

t1: 1.31860631 +/- 0.14157651 (10.74%) (init = 1.309932)

a2: -4.34320377 +/- 0.12457281 (2.87%) (init = -4.335256)

t2: 11.7995440 +/- 0.49140460 (4.16%) (init = 11.80991)

__lnsigma: -2.32707117 +/- 0.04573634 (1.97%) (init = -2.302585)

[[Correlations]] (unreported correlations are < 0.100)

C(a2, t2) = 0.982

C(t1, a2) = -0.933

C(t1, t2) = -0.888

C(a1, t1) = -0.567

C(a1, a2) = 0.271

C(a1, t2) = 0.230

Maximum likelihood Estimation

-----------------------------

a1: 2.9516079567371394

t1: 1.320921622273492

a2: -4.333103563716343

t2: 11.84652506057183

__lnsigma: -2.3328583631734823

1 sigma spread 0.14166142736065634

2 sigma spread 0.28026477066939837

Total running time of the script: ( 0 minutes 11.075 seconds)